“In recent years, Artificial Intelligence (AI) has made significant strides in various industries, from healthcare to finance and beyond. The technology is being used for tasks such as diagnosing diseases, automating mundane jobs and even creating art. However, with these advancements come concerns about the future of jobs, privacy and ethics.”

The above sentences seem normal at first. They’re not necessarily exciting sentences, but they still make sense.

It might be surprising to learn that they were actually written by an AI program called Chat Generative Pre-trained Transformer (ChatGPT).

ChatGPT launched in November 2022. The chatbot lets users write prompts or questions, to which the program responds.

To some, the idea that a program can write coherently like this is frightening. Others, however, welcome the new technology with open minds, prepared to find a way to work it into society.

In the classroom, while some professors are wary of ChatGPT, others are already thinking about ways to incorporate it into their classes.

Nathan French, a professor of comparative religion at Miami University, created an account for the program and fiddled with it to see how well it worked.

French, who teaches Islamic studies, said he could see himself using ChatGPT to assist students who don't yet fully know Arabic, so they can work with more advanced Arabic texts while still learning the language. However, he doesn't want the tool to become a replacement for learning the language.

“I think the challenge is going to be to demonstrate to students that they should be confident in their own thinking and coming to terms with what they don't know,” French said. “I worry that there will be those who run to AI because they want to just get the right answer. And as anyone in third grade will tell you, your teacher takes away your calculator because they want you to learn the steps.”

With the writing capabilities of the technology, some teachers like French are concerned that students might use the tool to plagiarize, having the program write papers or portions of papers for them.

Mack Hagood, an associate professor of media and communication, said he’s worried students might rely on the technology as a crutch when they are overwhelmed with work.

“Students are stressed out,” Hagood said. “When they get in that really stressed out mode and they're just concerned about the end result of a grade, that's when these temptations to use these kinds of tools are really powerful.”

Brenda Quaye, assistant director for academic integrity at Miami, is aware of these concerns and makes it clear that using AI to complete work without permission violates academic integrity.

“If a student is just kind of wholesale using some program or app to churn out work, and then they are submitting it, that would be considered academic dishonesty unless a faculty member has given a student specific permission to complete the assignment in that way,” Quaye said. “But more broadly than that, what we want both students and faculty members to think about is if the AI is going to be used, how should it be used?”

Although Quaye said she hasn't seen problems with ChatGPT yet, her experience with previous technologies leads her to believe it won't be hard to tell whether a student has used it.

“I've seen an increase in the usage of [paraphrasing generators], and it's really obvious … very odd word choices, words that have a very specific meaning that probably shouldn't be changed get changed,” Quaye said. “Just based on my knowledge of what ChatGPT churns out and what I've seen so far in the various conversations that I have had with colleagues, it'll probably be pretty similar that there'll be some telltale signs.”

OpenAI, the company that owns ChatGPT, also launched a program on Jan. 31 called the AI Text Classifier, which estimates how likely it is that a piece of text was generated using AI technology.

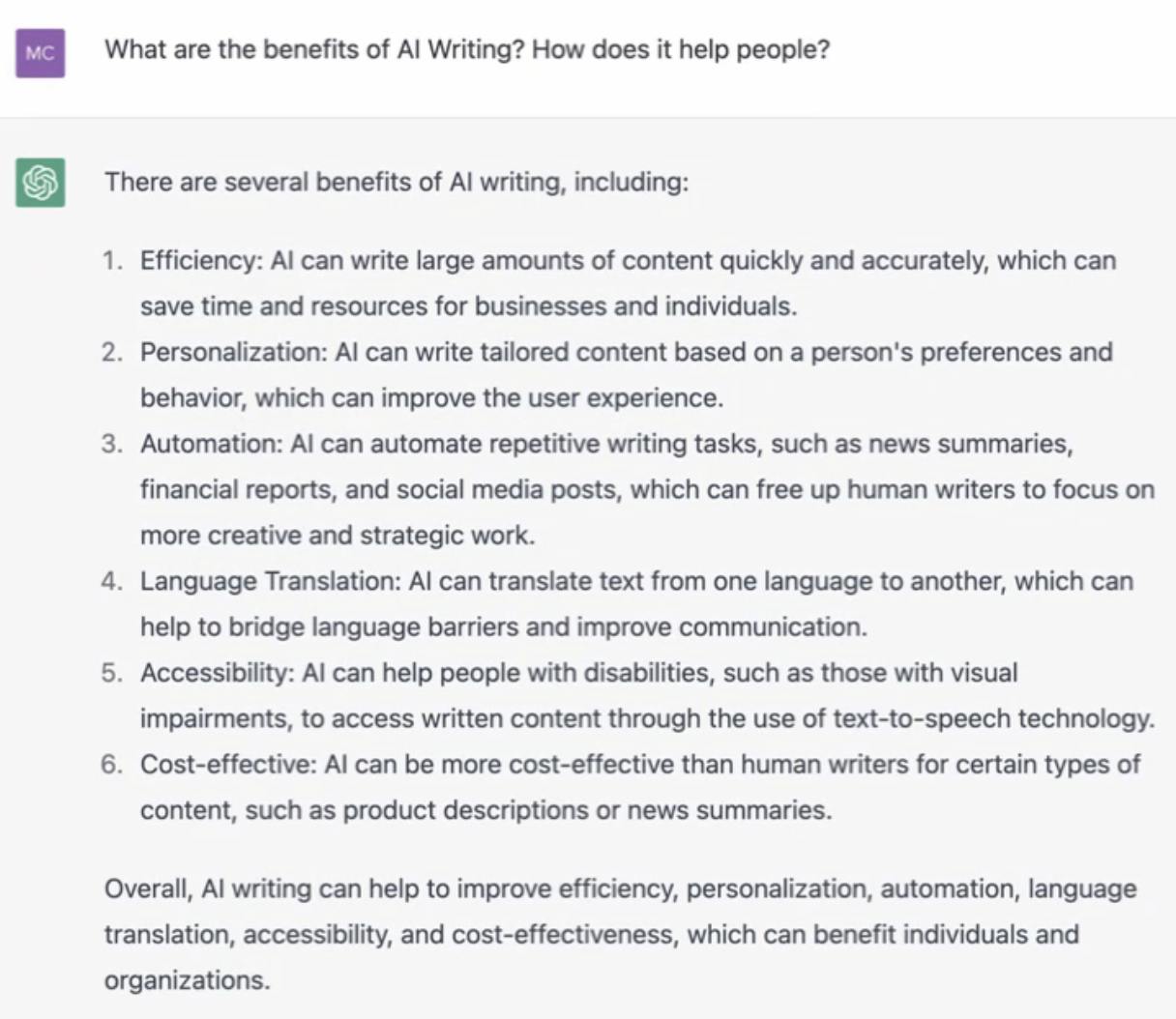

Heidi McKee and Jim Porter, professors of English, have studied AI programs together since their first research article on digital writing in 2008. McKee said that although ChatGPT is good at giving information, it does not understand the information it's giving or the situation it's giving it in, something she refers to as rhetorical context.

“They're able to write really well-crafted prose, no grammatical mistakes, unless you ask it to put some in, but ChatGPT, even as the developers acknowledge, has no actual idea of what it's saying, and it doesn't know the rhetorical context of the situation,” McKee said. “So it sometimes can say things that are wrong. It sometimes even within a single essay repeats itself in ways that even contradict itself.”

The use of AI programs can also vary based on class topics. While ChatGPT is able to respond to prompts based on information users give it, it can’t think for itself.

“What we've been labeling as AI lately, it's just a machine learning that's been branded as this thing that we're now calling AI,” Hagood said. “It's machine learning to recognize certain kinds of patterns and then extrapolate from them in cases where the objective is really narrow … Those are the things that we started … calling AI and then so it has the connotations of this HAL 9000 Artificial Intelligence.”

For assignments where critical thinking is required, ChatGPT might not be able to do students’ work for them. However, for classes in areas such as electrical and computer engineering, professors such as Peter Jamieson actually encourage the use of these programs, letting students use the tool to find answers to questions.

“For us when we teach things like programming or design, I see no reason why you wouldn't have a ChatGPT window always open just like you have your browser window to go search on Google,” Jamieson said.

Quinn Classen, a sophomore software engineering major, hasn’t seen ChatGPT or any other tools in his classes yet, but he worries about relying too heavily on AI.

“It really gets me excited about the future of technology,” Classen said. “But if we’re not careful enough to make sure that the human touch and things [are] maintained, then we run into a lot of issues … A human thinks about a billion things at any given time, for even the simplest task, which is what slows us down, but it also keeps us grounded.”

Aidan McLaughlin, a sophomore computer science, philosophy and math major, however, is more hopeful about ChatGPT's presence in the classroom.

“ChatGPT, I think, is the most powerful accelerant for learning that was put out in the last 10 years,” McLaughlin said. “It’s a stride similar to Google. I think in the classroom it has applications to help detangle students when they get stuck … It’s like having a best friend who is a world expert in every topic.”

French also serves on University Senate and said other senators have raised concerns about the technology during meetings. French said that although people should be cautious about using these new tools, they should also be willing to try incorporating them.

“My concern is those who would outright ban these instruments, those who would discourage their usage in any circumstance,” French said. “There's an argument to be made that they're going to be very helpful to future learners and future teachers and future researchers as well.”

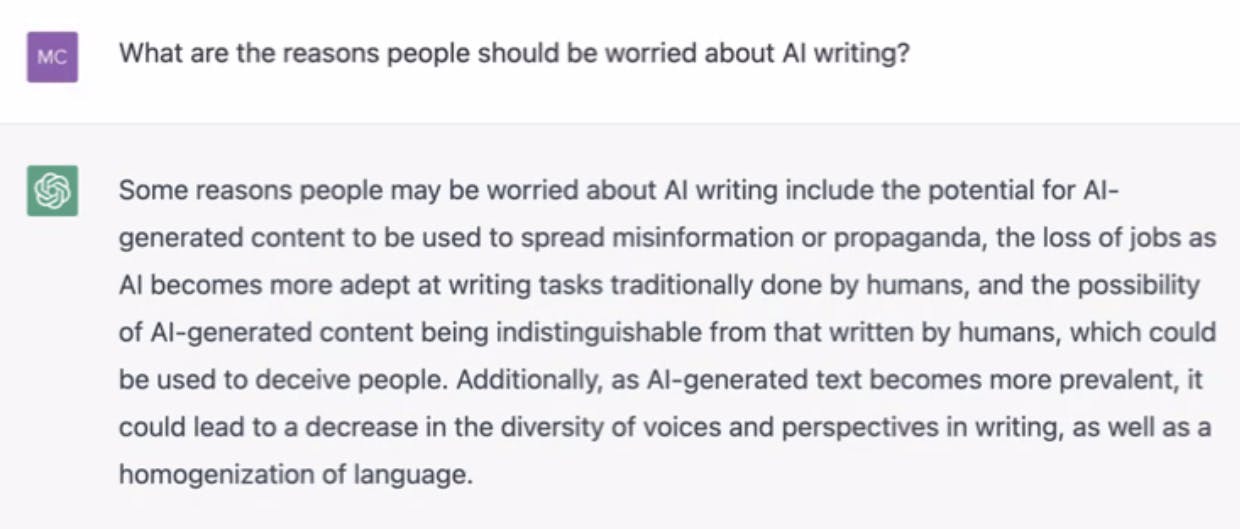

The discussion of AI use is not confined to the world of education. In other applications, testers have found problems with these programs.

“They still have biases that are shaped into them from the data that's offered to them from the people who originally designed them,” McKee said. “For example … someone asked [ChatGPT] to write code to show the characteristics of an effective scientist, and ChatGPT immediately wrote: ‘true, white male.’”

Although ChatGPT pushes the boundaries of technology, its presence still worries many.

“Some people are afraid of AI because they think it's so smart, it's going to take over the world,” Hagood said. “I'm afraid of AI because it's so dumb.”